Data Analytics Firm Investigated After Clients Discover Profiling Was Conducted Without Explicit Consent

The Challenge

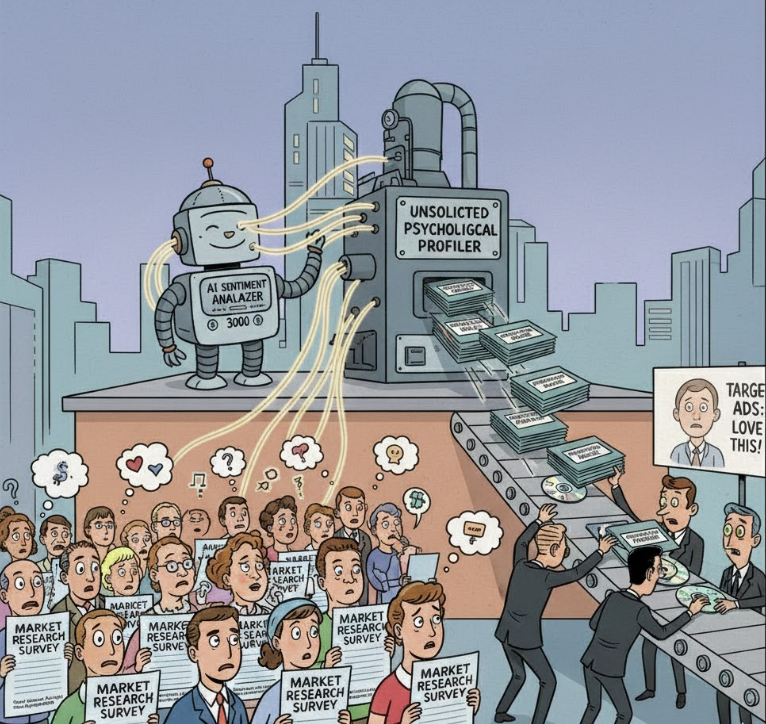

In 2025, DataPath Analytics, a national market research firm, found itself at the center of a public and regulatory controversy. The company had deployed an AI-driven sentiment analysis engine to evaluate long-form survey responses. Although the tool was initially intended to enhance the quality of consumer insights, it went beyond aggregating data: it generated psychological profiles and behavioral forecasts without obtaining explicit participant consent.

These profiles were bundled into segmentation packages and sold to commercial clients for targeted advertising and product development strategies. Participants had agreed only to standard market research participation and were unaware that their responses would be used to infer sensitive personal traits. The Office of the Privacy Commissioner launched a formal investigation and concluded that the profiling exceeded the reasonable expectations of respondents under PIPEDA. As news of the investigation spread, clients began suspending renewals, and public advocacy groups demanded greater oversight of AI use in the analytics industry.

Our Solution

Our firm was brought in to conduct a full review of the data lifecycle and AI tool usage. We immediately recommended suspending the profiling engine and pausing all reports that relied on unauthorized inference techniques. A data ethics audit was launched, and client deliverables were reviewed to identify instances of non-consensual profiling.

To restore trust, we helped DataPath implement a transparent AI governance framework. This included the creation of an internal review board, updated participant consent forms, and disclosure language that matched the actual scope of AI processing. Legal and communications teams were engaged to draft public and client-facing remediation statements that emphasized accountability and reform.

The Value

DataPath avoided class action litigation and significant financial penalties by taking swift corrective action. Although the company received a small administrative fine, it preserved nearly $300,000 in client renewals and began rebuilding its reputation as a responsible AI user.

The case has since become a reference point for ethical data usage and AI governance within the analytics sector. It reinforced the idea that as tools grow more powerful, consent must evolve to match capability.

Implementation Roadmap

- Suspend all profiling based on AI sentiment tools

- Establish AI oversight committee and review protocols

- Update participant consent forms to match AI use cases

- Disclose practices to clients and issue correction letters

- Launch an ethics review process for future AI adoption
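As an illustration of how the consent-related roadmap items might be enforced in a data pipeline, the following minimal sketch gates each processing purpose on the purposes a participant actually agreed to. The `Purpose` values, the `Respondent` record, and the `split_by_consent` helper are all hypothetical names chosen for this example; they do not describe DataPath's actual systems.

```python
from dataclasses import dataclass, field
from enum import Enum


class Purpose(Enum):
    """Hypothetical processing purposes a consent form could list."""
    AGGREGATE_SENTIMENT = "aggregate_sentiment"
    PSYCHOLOGICAL_PROFILING = "psychological_profiling"
    BEHAVIORAL_FORECASTING = "behavioral_forecasting"


@dataclass(frozen=True)
class Respondent:
    """Hypothetical survey participant with the purposes they consented to."""
    respondent_id: str
    consented_purposes: frozenset = field(default_factory=frozenset)


def split_by_consent(respondents, purpose):
    """Return (allowed, blocked) respondent lists for one processing purpose."""
    allowed = [r for r in respondents if purpose in r.consented_purposes]
    blocked = [r for r in respondents if purpose not in r.consented_purposes]
    return allowed, blocked


# Example: only r2 agreed to profiling, so only r2 may be profiled.
respondents = [
    Respondent("r1", frozenset({Purpose.AGGREGATE_SENTIMENT})),
    Respondent(
        "r2",
        frozenset({Purpose.AGGREGATE_SENTIMENT, Purpose.PSYCHOLOGICAL_PROFILING}),
    ),
]
allowed, blocked = split_by_consent(respondents, Purpose.PSYCHOLOGICAL_PROFILING)
print([r.respondent_id for r in allowed])
print([r.respondent_id for r in blocked])
```

In a design like this, a new AI use case would require adding a purpose and collecting fresh consent before any records pass the gate, which keeps consent aligned with capability.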